USE CASE AI

Smarter AI, better performance per watt. Intelligent optimization that reduces energy use while maximizing system efficiency.

AI energy intelligence for large-scale AI operations

AI training is compute-intensive, power-hungry, and growing fast. EAR helps AI infrastructure teams gain control over energy use, improve throughput, and stay within power and environmental budgets. It gives AI factories the visibility, control, and automation they need to scale responsibly and cost-effectively, with full-stack energy optimization across CPUs, GPUs, and accelerators.

Key Benefits for AI Infrastructure Teams

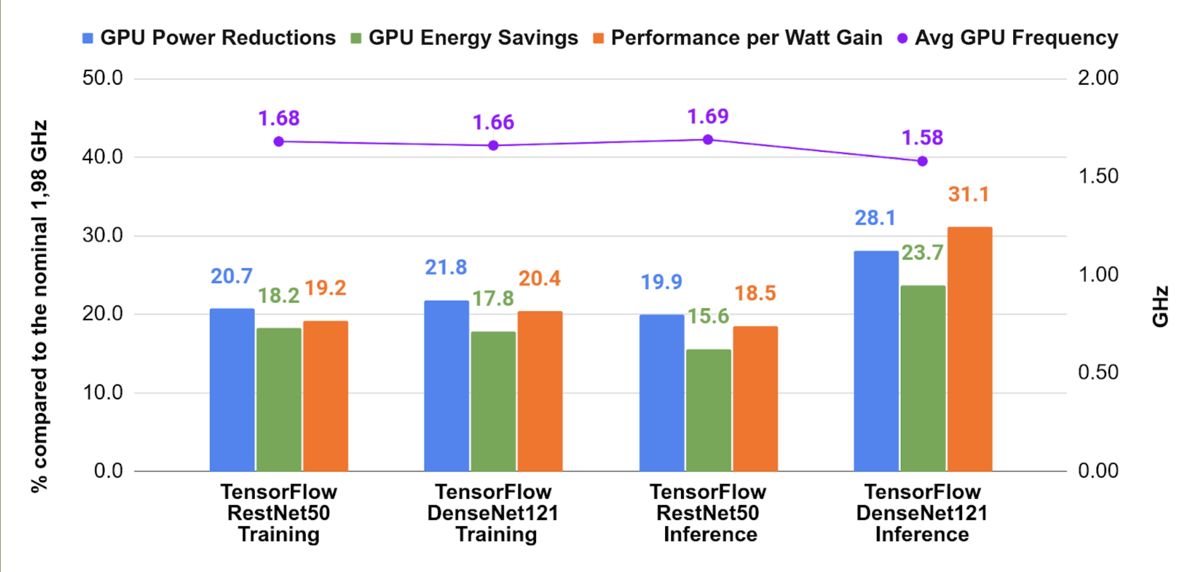

Large-scale AI workloads push GPUs and clusters to their power limits. Without visibility and control, efficiency drops and costs rise. EAR helps infrastructure teams analyze real application behavior, optimize performance per watt, and enforce power constraints automatically without modifying applications or workflows.

- Improve throughput per watt
- Keep up with growth
- Stay within power budgets
- Control energy costs
- See what’s happening
- Prepare for compliance

Supported Cluster Configurations

HPC Clusters (CPU-only)

AI Factories (CPU-only)

Hybrid HPC/AI (CPU + GPU)

Supported Schedulers

FAQs

What’s EAR’s value for AI workloads?

EAR makes energy a first-class metric in your AI stack. It gives infrastructure teams visibility into power consumption and job behavior, and lets them enforce power and performance policies across complex, heterogeneous systems, from CPU-only inference nodes to GPU-packed training clusters.

Does EAR reduce performance?

No, EAR is designed to maximize performance-per-watt, not just reduce energy costs. In many cases, EAR allows AI workloads to complete faster within the same power envelope, thanks to better resource tuning.
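To make the performance-per-watt reasoning concrete: at a fixed average power draw, the energy a job consumes is average power multiplied by runtime, so a job that finishes sooner inside the same power envelope uses proportionally less energy and delivers more work per kWh. The sketch below is a hypothetical illustration, not EAR code. The helper names, the 100 kW job, the 90,000 training steps and the 10 h to 9 h runtimes are all made-up inputs, not EAR measurements.

```python
# Hypothetical illustration: energy to solution = average power x runtime.
# The job size, step count and runtimes below are made-up inputs, not EAR results.


def energy_kwh(avg_power_kw: float, runtime_h: float) -> float:
    """Energy consumed by a job drawing avg_power_kw on average for runtime_h hours."""
    return avg_power_kw * runtime_h


def work_per_kwh(work_units: float, avg_power_kw: float, runtime_h: float) -> float:
    """Useful work (e.g. training steps) delivered per kWh, a performance-per-watt proxy."""
    return work_units / energy_kwh(avg_power_kw, runtime_h)


if __name__ == "__main__":
    steps = 90_000                       # hypothetical training steps per job
    baseline = energy_kwh(100.0, 10.0)   # 100 kW average for 10 h
    tuned = energy_kwh(100.0, 9.0)       # same power envelope, 10% shorter runtime
    print(f"baseline: {baseline:.0f} kWh, tuned: {tuned:.0f} kWh")
    print(f"steps/kWh: {work_per_kwh(steps, 100.0, 10.0):.1f} -> "
          f"{work_per_kwh(steps, 100.0, 9.0):.1f}")
```

With these illustrative inputs, energy drops from 1000 kWh to 900 kWh and work per kWh rises from 90.0 to 100.0 steps, because power stays constant while runtime shrinks.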

What’s the ROI for an AI Factory?

AI factories running power-hungry training workloads can see positive ROI in under one year with EAR.

Example based on a 1 MW AI cluster:

- Annual electricity bill: €819,936

- EAR-enabled energy savings (20%): €163,987/year

- Total savings over 5 years: €819,936

- EAR service cost (5 years): €428,281

- Net savings: €391,655 over 5 years

That’s roughly 9.6% net savings on the five-year electricity spend (€4,099,680), with better throughput and more jobs completed per node.
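The example’s arithmetic can be checked in a few lines. The function below is a hypothetical helper (not part of EAR) that takes the page’s stated inputs: the €819,936 annual bill, the assumed 20% savings rate, a 5-year horizon, and the €428,281 five-year service cost. The first-year figure assumes, as our own illustrative choice, that the service cost is billed in equal yearly instalments. With a single up-front payment, break-even would come later.

```python
# Reproduces the 1 MW ROI example. Inputs are the page's stated figures;
# the 20% savings rate is an assumption of the example, not a guarantee.


def roi_summary(annual_bill_eur: float, savings_rate: float, years: int,
                service_cost_eur: float) -> dict:
    """Return rounded savings figures and the net share of total electricity spend."""
    annual_savings = annual_bill_eur * savings_rate
    gross_savings = annual_savings * years
    net_savings = gross_savings - service_cost_eur
    total_spend = annual_bill_eur * years
    # Hypothetical assumption: the service cost is spread evenly across the years.
    first_year_net = annual_savings - service_cost_eur / years
    return {
        "annual_savings": round(annual_savings),
        "gross_savings": round(gross_savings),
        "net_savings": round(net_savings),
        "first_year_net": round(first_year_net),
        "net_share_of_spend": net_savings / total_spend,
    }


if __name__ == "__main__":
    summary = roi_summary(819_936, 0.20, 5, 428_281)
    for key, value in summary.items():
        print(key, value)
```

With these inputs it returns annual savings of €163,987, gross savings of €819,936, net savings of €391,655, a first-year net of €78,331 under the even-billing assumption, and a net share of five-year spend of about 9.6%.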

Is EAR compatible with AI infrastructure?

Yes. EAR supports Intel, AMD, ARM, and NVIDIA hardware, and works with SLURM, Kubernetes, and mixed workloads. It integrates easily into modern AI stacks.

Ready to optimize your data center?

EAR helps data centers, HPC facilities and AI infrastructures achieve more performance per watt by intelligently managing energy usage at runtime without sacrificing reliability or scalability.