USE CASE HPC


HPC Data Centers

Optimize performance per watt across your entire HPC environment

High-Performance Computing (HPC) centers power scientific discovery, national infrastructure, and cutting-edge research. But as systems grow in complexity and energy consumption, operating within budget and sustainability goals becomes increasingly difficult.

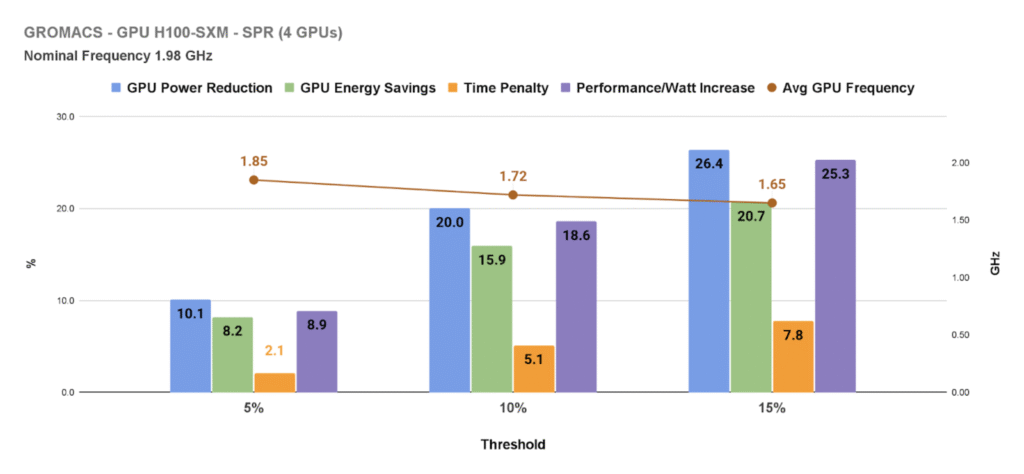

EAR helps HPC centers gain energy efficiency across workloads, improving throughput, reducing costs, and supporting long-term system health.

Deployed at leading European supercomputing sites such as LRZ, BSC, SURF, and EDF, EAR delivers measurable impact from day one.

Key Benefits for HPC Centers

Up to 50% energy savings per job

Maximize throughput while reducing energy waste

Extend system lifespan and reduce cooling needs

Less power used = less heat generated

Enable fair and accurate energy accounting

Track energy use per user, job, or project

Comply with sustainability and power budget constraints

Meet regulatory, funding, or internal carbon goals

Fast ROI: savings from year one

High return with minimal effort or integration friction

Works with existing infrastructure

Supports Intel, AMD, ARM, NVIDIA, SLURM, and more

Trusted by leading HPC centers

Institutions like LRZ, SURF, BSC (MareNostrum 5), and EDF use EAR to improve energy efficiency, enforce power caps, and enable energy-aware scheduling.

- How we can help

How EAR helps:

Users & Researchers

Focus on results, not tuning. EAR works transparently, optimizing your application's energy use without code changes.

Motivation:

scientific results

Challenges:

- Understanding application behavior and actual impact (metrics)

- Limited skills, time, or opportunity for optimization (transparent operation)

System Administrators

Get real-time insights, automated controls, and smarter support for users, without manual intervention.

Motivation:

keep the system running within the limits

Challenges:

- Cannot support individual users with energy optimization efforts (automation)

- Operate the system within data center power limits (hard power capping)

Data Center Managers

Stay within OPEX/CAPEX goals, report on CO₂ impact, and track ROI from energy KPIs.

Motivation:

maximize work output within CAPEX/OPEX budgets

Challenges:

- Understand the workloads running in the data center (analysis)

- Control energy budget (power capping)

- Charge users for energy consumption (accounting)

Use Cases

LRZ SuperMUC-NG

Reducing peak power from 3 MW to 2 MW with minimal performance loss on one of Europe’s most efficient supercomputers.

SURF – Snellius

Partnering to explore software-driven energy efficiency for hybrid AI/HPC systems.

BSC – MareNostrum 5

Optimizing energy usage on a 314 PFlops pre-exascale system across CPU and GPU partitions.

EDF CRONOS

Gaining visibility and control of energy use by project, code, and user across 2 MW of internal HPC compute.

- Find your answer

FAQs

Will EAR affect my scientific application results?

No. EAR works transparently at runtime without modifying your application, and it is designed to preserve or improve performance while optimizing energy use behind the scenes.

Do users need to modify their code?

No changes are required. EAR operates transparently at runtime, automatically detecting and optimizing jobs.

Can EAR support diverse HPC hardware?

Yes. EAR supports Intel, AMD, and ARM CPUs as well as NVIDIA GPUs. It’s vendor-agnostic and integrates easily with SLURM and other schedulers.

What’s the ROI for a large HPC cluster?

With EAR, a large HPC cluster consuming 1 MW of power can reach positive ROI in under one year.

Here’s an example calculation:

- Annual electricity bill: €819,936

- EAR-enabled energy savings (20%): €163,987/year

- Total savings over 5 years: €819,936

- EAR service cost (5 years): €428,281

- Net savings: €391,655 over 5 years

That’s a net saving of nearly 10% (about 9.6%) on total electricity spend over five years, while achieving more performance per watt.

Actual ROI may vary depending on electricity prices, system load, and workload mix, but EAR typically pays for itself within the first year.
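To adapt the example to your own site, the arithmetic can be reproduced in a few lines of Python. This is a minimal sketch, not an official EAR calculator: the function name `roi_summary` and its parameters are hypothetical, and the inputs are the example's own figures (a 20% saving rate, a five-year horizon, and the quoted five-year service cost). Spreading the service cost evenly across the five years is an assumption made here for the year-by-year comparison.

```python
def roi_summary(annual_bill_eur, savings_rate, years, service_cost_eur):
    """Reproduce the example ROI arithmetic (hypothetical helper)."""
    annual_savings = annual_bill_eur * savings_rate
    total_savings = annual_savings * years
    net_savings = total_savings - service_cost_eur
    total_spend = annual_bill_eur * years
    return {
        "annual_savings": annual_savings,
        "total_savings": total_savings,
        "net_savings": net_savings,
        # Net savings as a fraction of all electricity spend over the period
        "net_share_of_spend": net_savings / total_spend,
        # Assumption: service cost spread evenly across the years
        "annual_service_cost": service_cost_eur / years,
    }


# Figures taken from the example calculation
summary = roi_summary(
    annual_bill_eur=819_936,
    savings_rate=0.20,
    years=5,
    service_cost_eur=428_281,
)

for name, value in summary.items():
    if name == "net_share_of_spend":
        print(f"{name}: {value:.1%}")
    else:
        print(f"{name}: EUR {value:,.0f}")
```

With these inputs, the yearly savings exceed the yearly share of the service cost, which is what supports the "positive ROI in under one year" claim. Replace the four arguments with your own electricity bill, expected saving rate, and quoted cost to get a site-specific estimate.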

Ready to optimize your data center?
